
佐藤 智和 / Tomokazu Sato


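Main research areas:

(1) Computer vision

・Estimating the position and orientation of omnidirectional multi-camera systems

・Improving the accuracy of camera position and orientation estimation for ground-level video using aerial images

・Estimating 3-D scene information by stereo analysis of multiple image sets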

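(2) Free-viewpoint image generation

・View-dependent free-viewpoint image generation using omnidirectional cameras

・Remote robot teleoperation interfaces based on free-viewpoint image generation

・Evaluating algorithms for vehicle-mounted camera driver-assistance systems using free-viewpoint images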

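(3) Mixed reality and augmented reality

・Camera position and orientation estimation using a landmark database

・Pre-generated augmented reality systems

・Augmented-reality replay of human motion using RGB-D cameras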

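(4) Multi-dimensional image inpainting

・Inpainting missing regions in 2-D images

・Completing missing regions in 3-D shapes

・Improving the quality of image inpainting using deep learning

Examples of past research: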


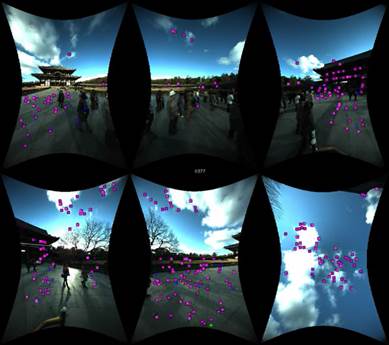

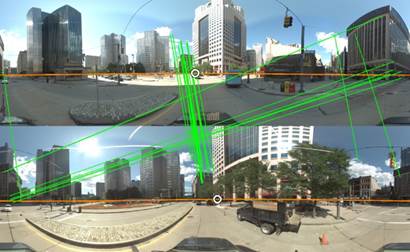

Structure from motion for omni-directional video


Abstract: An omnidirectional camera built from multiple cameras offers high resolution that is almost uniform in every viewing direction. In this research, we propose a method for recovering the extrinsic camera parameters of a moving omni-directional multi-camera system (OMS). We first discuss the perspective n-point (PnP) problem for an OMS and then describe a practical method for estimating extrinsic camera parameters from multiple image sequences captured by an OMS. The proposed method combines shape-from-motion and PnP techniques.

T. Sato, S. Ikeda, and N. Yokoya: "Extrinsic camera parameter recovery from multiple image sequences captured by an omni-directional multi-camera system", Proc. European Conf. on Computer Vision (ECCV2004), Vol. 2, pp. 326-340, May 2004.
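The main difference from single-camera PnP is that each observation passes through a fixed, pre-calibrated rig-to-camera transform before projection, so a single rig pose has to explain the observations of all cameras. Below is a minimal sketch of that projection model and of the reprojection error a PnP solver for an OMS would minimize, assuming ideal pinhole cameras that share one focal length and principal point. The function names are hypothetical, the nonlinear minimization itself is not shown, and this is not the implementation from the cited paper.

```python
def mat_vec(m, v):
    """Multiply a 3x3 matrix (tuple of rows) by a 3-vector."""
    return tuple(sum(m[i][j] * v[j] for j in range(3)) for i in range(3))


def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def project_oms(point, R, t, rig, focal, center):
    """Project a world point into every camera of a rigid multi-camera rig.

    R, t: world-to-rig pose (the unknowns of the PnP problem).
    rig:  list of (R_c, t_c) rig-to-camera transforms, fixed by calibration.
    Returns one (u, v) per camera, or None if the point is behind that camera.
    """
    p_rig = add(mat_vec(R, point), t)
    pixels = []
    for R_c, t_c in rig:
        x, y, z = add(mat_vec(R_c, p_rig), t_c)
        pixels.append(None if z <= 0 else (focal * x / z + center[0], focal * y / z + center[1]))
    return pixels


def reprojection_error(points, observations, R, t, rig, focal, center):
    """Sum of squared pixel errors; observations[i] maps camera index -> observed (u, v)."""
    total = 0.0
    for point, obs in zip(points, observations):
        projected = project_oms(point, R, t, rig, focal, center)
        for cam, (u, v) in obs.items():
            if projected[cam] is not None:
                du, dv = projected[cam][0] - u, projected[cam][1] - v
                total += du * du + dv * dv
    return total
```

Because every camera shares the same (R, t), correspondences seen by any camera of the rig constrain the same six unknowns.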


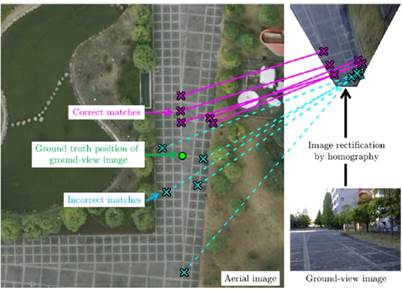

Structure from motion using aerial images


Abstract: In this paper, we propose a new structure-from-motion pipeline for ground-view images that uses feature points in an aerial image as references for removing accumulated errors. The challenge is to design a method that discriminates correct matches from unreliable ones between ground-view images and an aerial image. To overcome this difficulty, we employ geometric consistency verification of matches using a two-stage RANSAC scheme: (1) sampling-based local verification that focuses on the orientation and scale information extracted by a feature descriptor, and (2) global verification using camera poses estimated by bundle adjustment with the sampled matches.

H. Kume, T. Sato, and N. Yokoya: "Bundle adjustment using aerial images with two-stage geometric verification", Computer Vision and Image Understanding, Vol. 138, pp. 74-84, Sep. 2015.
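Stage (1) rests on the fact that a single match already carries enough information for a local hypothesis: the ratio of the descriptor scales and the difference of the descriptor orientations, together with the two keypoint positions, fix a 2-D similarity transform. The sketch below assumes each keypoint is given as (x, y, orientation in radians, scale), uses a similarity transform as the local model, and, because a hypothesis needs only one match, tries every match instead of sampling at random. It is a simplified stand-in for the paper's local verification with hypothetical names; stage (2), the bundle-adjustment-based global check, is not shown.

```python
import math


def similarity_from_match(match):
    """A single match fixes a 2-D similarity: scale ratio, orientation difference, translation.

    Each keypoint is (x, y, orientation_rad, scale).
    """
    (x1, y1, a1, s1), (x2, y2, a2, s2) = match
    k, theta = s2 / s1, a2 - a1
    c, s = k * math.cos(theta), k * math.sin(theta)
    return c, s, x2 - (c * x1 - s * y1), y2 - (s * x1 + c * y1)


def is_consistent(match, model, tol):
    """True if the model maps the first keypoint to within `tol` pixels of the second."""
    c, s, tx, ty = model
    (x1, y1, _, _), (x2, y2, _, _) = match
    px, py = c * x1 - s * y1 + tx, s * x1 + c * y1 + ty
    return math.hypot(px - x2, py - y2) <= tol


def local_verification(matches, tol=3.0):
    """Largest set of matches consistent with a hypothesis generated from a single match."""
    best = []
    for m in matches:  # exhaustive instead of random sampling: one match per hypothesis
        model = similarity_from_match(m)
        inliers = [n for n in matches if is_consistent(n, model, tol)]
        if len(inliers) > len(best):
            best = inliers
    return best
```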


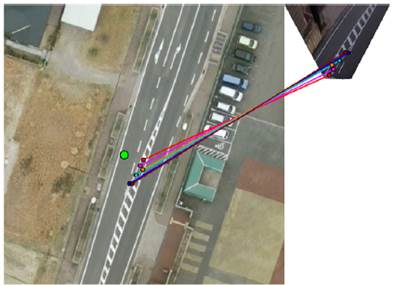

Wide-baseline epipolar geometry estimation


Abstract: This paper presents a robust method for estimating epipolar geometry between omnidirectional images in wide-baseline settings, such as Google Street View images. The main idea is to introduce new geometric constraints, derived from the feature descriptors, into the model verification step of RANSAC. We show that these constraints yield more reliable matches, which can be used to recover the correct epipolar geometry in very difficult situations. The performance of the proposed method is demonstrated using a complete structure-from-motion pipeline on a real dataset of Google Street View images.

T. Sato, T. Pajdla, and N. Yokoya: "Epipolar geometry estimation for wide-baseline omnidirectional street view images", Proc. 2nd IEEE Int. Workshop on Mobile Vision, pp. 56-63, Nov. 2011.
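For omnidirectional images it is convenient to work with unit bearing vectors on the viewing sphere rather than pixel coordinates, because directions behind the optical axis remain valid. The standard epipolar constraint then reads b2^T E b1 = 0 with E = [t]_x R, where X2 = R X1 + t maps points from the first camera frame to the second. The sketch below computes this algebraic residual, the kind of score a RANSAC loop would threshold during model verification. The descriptor-derived constraints proposed in the paper are not reproduced, and the function names are hypothetical.

```python
import math


def skew(t):
    """Cross-product matrix [t]_x, so that skew(t) applied to v equals t x v."""
    tx, ty, tz = t
    return ((0.0, -tz, ty), (tz, 0.0, -tx), (-ty, tx, 0.0))


def mat_mul(a, b):
    return tuple(tuple(sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)) for i in range(3))


def essential_matrix(R, t):
    """E = [t]_x R for the convention X2 = R X1 + t."""
    return mat_mul(skew(t), R)


def bearing(v):
    """Normalize a 3-D direction to a unit bearing vector on the viewing sphere."""
    n = math.sqrt(sum(x * x for x in v))
    return tuple(x / n for x in v)


def epipolar_residual(E, b1, b2):
    """|b2^T E b1|; zero for a correspondence that satisfies the epipolar geometry."""
    Eb1 = [sum(E[i][j] * b1[j] for j in range(3)) for i in range(3)]
    return abs(sum(b2[i] * Eb1[i] for i in range(3)))
```

Because the constraint holds equally for a bearing vector and its negation, an omnidirectional pipeline typically also checks that a triangulated point lies in the direction of both bearings; that check is omitted here.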


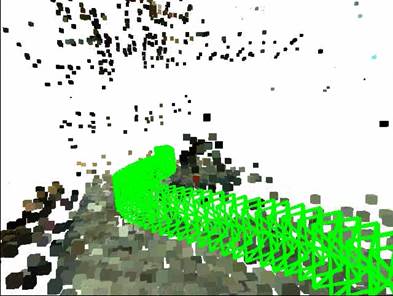

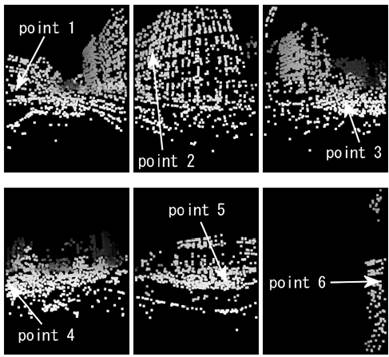

Depth estimation for omni-directional video


Abstract: This paper proposes a method for estimating depth from long-baseline image sequences captured by a precalibrated, moving omni-directional multi-camera system (OMS). Our idea for estimating an omni-directional depth map is very simple: counting interest points in the images is integrated into the framework of conventional multi-baseline stereo. With this simple algorithm, depth can be determined without computing the similarity measures, such as SSD and NCC, used in traditional stereo matching. The proposed method achieves depth estimation that is robust against image distortions and occlusions, at a lower computational cost than the traditional multi-baseline stereo method. These advantages suit the characteristics of omni-directional cameras.

T. Sato and N. Yokoya: "Efficient hundreds-baseline stereo by counting interest points for moving omni-directional multi-camera system", Journal of Visual Communication and Image Representation, Vol. 21, No. 5-6, pp. 416-426, July 2010.
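The counting idea can be sketched for a single pixel of a reference frame: each candidate depth along the pixel's viewing ray gives a 3-D point, that point is projected into the other frames, and the depth whose projections land on detected interest points in the most frames wins, with no SSD or NCC computed. The sketch assumes pinhole cameras with identity rotation and known centers, a reference camera at the origin, pixel coordinates measured from the principal point, and precomputed interest-point lists. It illustrates the voting principle only, not the paper's OMS implementation, and all names are hypothetical.

```python
import math


def project(point, center, focal):
    """Pinhole projection for a camera at `center` looking along +z (identity rotation)."""
    x, y, z = (p - c for p, c in zip(point, center))
    return (focal * x / z, focal * y / z) if z > 0 else None


def count_support(point, frames, focal, radius):
    """Number of frames with an interest point within `radius` pixels of the projection."""
    votes = 0
    for center, interest_points in frames:
        uv = project(point, center, focal)
        if uv is not None and any(math.dist(uv, q) <= radius for q in interest_points):
            votes += 1
    return votes


def depth_by_counting(ref_pixel, depths, frames, focal, radius=1.0):
    """Pick the candidate depth (reference camera at the origin) with the most supporting frames."""
    u, v = ref_pixel
    best_depth, best_votes = None, -1
    for z in depths:
        point = (u * z / focal, v * z / focal, z)
        votes = count_support(point, frames, focal, radius)
        if votes > best_votes:
            best_depth, best_votes = z, votes
    return best_depth, best_votes
```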


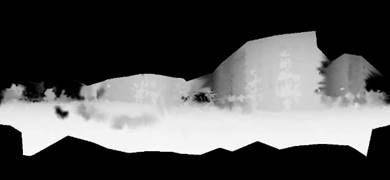

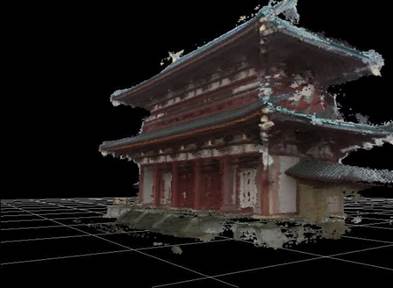

3D modeling from video images


Abstract: In this paper, we propose a dense 3-D reconstruction method that first estimates the extrinsic camera parameters of a hand-held video camera and then reconstructs a dense 3-D model of the scene. In the first process, extrinsic camera parameters are estimated by automatically tracking natural features together with a small number of predefined markers whose 3-D positions are known. Then, several hundred dense depth maps obtained by multi-baseline stereo are combined in a voxel space. In this way, an accurate dense 3-D model of an outdoor scene can be acquired from several hundred input images captured by a hand-held video camera.

T. Sato, M. Kanbara, N. Yokoya, and H. Takemura: "Dense 3-D reconstruction of an outdoor scene by hundreds-baseline stereo using a hand-held video camera", International Journal of Computer Vision, Vol. 47, No. 1-3, pp. 119-129, April 2002.
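One common way to combine many depth maps in a voxel space is voting: every depth map, after back-projection into world coordinates with the estimated camera parameters, casts at most one vote per voxel it hits, and only voxels supported by enough depth maps are kept. The sketch below shows that voting step under those assumptions, with back-projection and surface extraction omitted. It is a generic illustration with hypothetical names, not necessarily the integration scheme used in the paper.

```python
import math
from collections import Counter


def voxel_index(point, voxel_size):
    """Integer (i, j, k) index of the voxel containing a 3-D point."""
    return tuple(math.floor(c / voxel_size) for c in point)


def fuse_depth_maps(point_sets, voxel_size, min_votes):
    """Keep voxels hit by at least `min_votes` of the back-projected depth maps.

    point_sets: one list of world-space 3-D points per depth map.
    """
    votes = Counter()
    for points in point_sets:
        # A set ensures each depth map votes at most once per voxel.
        for idx in {voxel_index(p, voxel_size) for p in points}:
            votes[idx] += 1
    return {idx for idx, n in votes.items() if n >= min_votes}
```

The threshold `min_votes` trades completeness of the model against robustness to outliers in individual depth maps.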


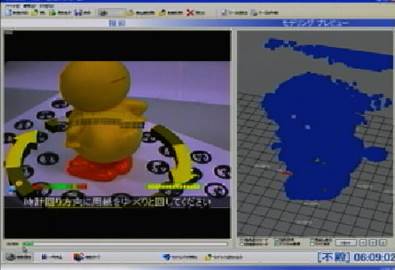

Interactive 3D modeling with AR support


Abstract: Most conventional methods require users to have some skill in controlling the camera movement to obtain a good 3-D model. In this study, we propose an interactive 3-D modeling interface that requires no special skills. The interface consists of “indication of camera movement” and “preview of reconstruction result.” Subjective evaluation experiments verify the usefulness of the proposed 3-D modeling interface.

K. Fudono, T. Sato, and N. Yokoya: "Interactive 3-D modeling system using a hand-held video camera", Proc. 14th Scandinavian Conf. on Image Analysis (SCIA2005), pp. 1248-1258, June 2005.


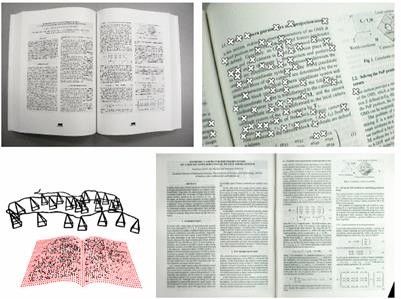

Real-time image mosaicing


Abstract: This paper presents a real-time video mosaicing system, one of the practical applications of mobile vision. To achieve video mosaicing on an actual mobile device, our method automatically tracks image features in the input images and estimates 6-DOF camera motion parameters with a fast and robust structure-from-motion algorithm. A preview of the mosaic image being generated is also rendered in real time to support the user. The system is primarily intended for flat targets, but it is also capable of 3-D video mosaicing, in which an unwrapped mosaic image is generated from a video sequence of a curved document.

T. Sato, A. Iketani, S. Ikeda, M. Kanbara, N. Nakajima, and N. Yokoya: "Mobile video mosaicing system for flat and curved documents", Proc. 1st International Workshop on Mobile Vision (IMV2006), pp. 78-92, May 2006.
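For a flat target, an estimated 6-DOF pose determines where each pixel lands in the mosaic: with the target on the plane Z = 0, the plane-to-image mapping is the homography H = K [r1 r2 t], built from the intrinsic matrix K, the first two columns of the rotation R, and the translation t. The sketch below inverts H to map a pixel back onto the plane. It assumes a known K and a world-to-camera pose, omits lens distortion and blending, and uses hypothetical names. The curved-document (3-D) case needs a non-planar unwrapping and is not covered.

```python
def mat_mul(a, b):
    return tuple(tuple(sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)) for i in range(3))


def inverse3(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if det == 0:
        raise ValueError("singular matrix")
    adj = ((e * i - f * h, c * h - b * i, b * f - c * e),
           (f * g - d * i, a * i - c * g, c * d - a * f),
           (d * h - e * g, b * g - a * h, a * e - b * d))
    return tuple(tuple(x / det for x in row) for row in adj)


def plane_homography(K, R, t):
    """H = K [r1 r2 t]: maps plane points (X, Y, 1) on Z = 0 to homogeneous pixels."""
    M = tuple((R[i][0], R[i][1], t[i]) for i in range(3))
    return mat_mul(K, M)


def pixel_to_mosaic(K, R, t, u, v):
    """Map pixel (u, v) of a frame with pose (R, t) to (X, Y) on the target plane."""
    Hinv = inverse3(plane_homography(K, R, t))
    x, y, w = (Hinv[i][0] * u + Hinv[i][1] * v + Hinv[i][2] for i in range(3))
    return x / w, y / w
```

Because all frames are mapped into the same plane coordinates, a mosaic can be assembled by resampling each frame into a common grid over (X, Y).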


Feature-landmark-based geometric registration


Abstract: In this research, the extrinsic camera parameters of video images are estimated from correspondences between pre-constructed feature landmarks and image features. To achieve real-time camera parameter estimation, the number of matching candidates is reduced by using landmark priorities that are determined from previously captured video sequences.

T. Taketomi, T. Sato, and N. Yokoya: "Real-time and accurate extrinsic camera parameter estimation using feature landmark database for augmented reality", Int. Journal of Computers and Graphics, Vol. 35, No. 4, pp. 768-777, Aug. 2011.
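The candidate-reduction step can be sketched as ranking the landmarks predicted to be visible by a priority score and matching only the top few. Here the priority is assumed to be the fraction of past observations in which a landmark was actually matched; the paper's exact priority definition, the visibility test, and the matching itself are not reproduced, and the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Landmark:
    landmark_id: int
    times_matched: int  # times the landmark was matched in previously captured sequences
    times_visible: int  # times it was expected to be visible in those sequences

    @property
    def priority(self) -> float:
        # Assumed priority: empirical success rate of matching this landmark.
        return self.times_matched / self.times_visible if self.times_visible else 0.0


def select_candidates(landmarks, visible_ids, max_candidates):
    """Return at most `max_candidates` visible landmarks, highest priority first."""
    visible = [lm for lm in landmarks if lm.landmark_id in visible_ids]
    visible.sort(key=lambda lm: lm.priority, reverse=True)
    return visible[:max_candidates]
```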


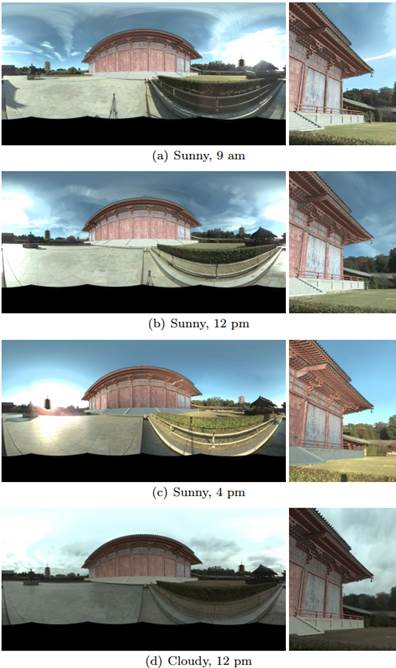

Addressing temporal inconsistency in indirect augmented reality


Abstract: Unlike traditional augmented reality (AR), indirect augmented reality (IAR) takes a unique approach to achieving high-quality synthesis of the real and virtual worlds. However, one drawback of IAR is the inconsistency between the real world and the pre-captured image. In this paper, we present a new classification of IAR inconsistencies and analyze the effect of these inconsistencies on the IAR experience. Accordingly, we propose a novel IAR system that reflects real-world illumination changes by selecting an appropriate image from among multiple pre-captured images obtained under various illumination conditions.

F. Okura, T. Akaguma, T. Sato, and N. Yokoya: "Addressing temporal inconsistency in indirect augmented reality", Multimedia Tools and Applications (Online First), Jan. 2016.
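The selection step can be sketched as a nearest-neighbor search: summarize the current illumination with a small descriptor, compare it with the descriptor stored for each pre-captured image, and present the closest one. The descriptor used here, the mean RGB color of a set of sample pixels, is a placeholder assumption with hypothetical function names; the paper's actual illumination measure and any temporal smoothing are not reproduced.

```python
import math


def illumination_descriptor(pixels):
    """Mean (R, G, B) over sample pixels; a stand-in for a real illumination measure."""
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))


def select_precaptured(live_pixels, precaptured):
    """Return the name of the pre-captured image whose descriptor is closest to the live view.

    precaptured: list of (name, descriptor) pairs computed offline.
    """
    current = illumination_descriptor(live_pixels)
    name, _ = min(precaptured, key=lambda item: math.dist(current, item[1]))
    return name
```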


Image inpainting using an energy function


Abstract: Image inpainting is a technique for removing undesired visual objects from images and filling the missing regions with plausible textures. In this paper, to improve the image quality of the completed texture, the objective function is extended by allowing brightness changes of sample textures and by introducing spatial locality as an additional constraint. The effectiveness of these extensions is demonstrated by applying the proposed method to one hundred images and comparing the results with those of conventional methods.

N. Kawai, T. Sato, and N. Yokoya: "Image inpainting considering brightness change and spatial locality of textures", CD-ROM Proc. Int. Conf. on Computer Vision Theory and Applications (VISAPP), Vol. 1, pp. 66-73, Jan. 2008.
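The brightness-change extension can be illustrated on a single pair of patches: if a source patch may be scaled by a gain α before being compared with the target, the α that minimizes the sum of squared differences has the closed form α = Σ t·s / Σ s², so a darker or brighter copy of the right texture is no longer penalized. The spatial-locality constraint is modeled here, as an assumption, by a quadratic penalty on the distance between the target and source positions. This is a sketch of one term of such an energy with hypothetical names, not the paper's objective function.

```python
def brightness_compensated_ssd(target, source):
    """SSD between target and gain-adjusted source; returns (gain, cost)."""
    num = sum(t * s for t, s in zip(target, source))
    den = sum(s * s for s in source)
    gain = num / den if den else 1.0  # closed-form least-squares gain
    cost = sum((t - gain * s) ** 2 for t, s in zip(target, source))
    return gain, cost


def patch_cost(target, source, target_pos, source_pos, locality_weight):
    """Brightness-compensated SSD plus an assumed quadratic spatial-locality penalty."""
    _, ssd = brightness_compensated_ssd(target, source)
    dx = target_pos[0] - source_pos[0]
    dy = target_pos[1] - source_pos[1]
    return ssd + locality_weight * (dx * dx + dy * dy)
```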


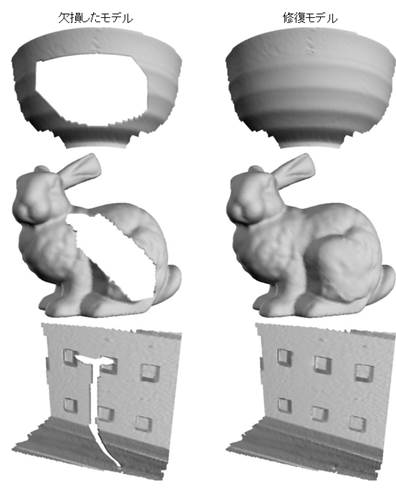

Inpainting for 3-D models


Abstract: 3-D mesh models generated from range scanners or video images often have holes caused by occlusions, both by other objects and by the object itself. This paper proposes a novel method for filling the missing parts of such incomplete models. The missing parts are filled by minimizing an energy function defined in terms of the similarity of local shape between the missing region and the rest of the object. The proposed method can generate complex and consistent shapes in the missing region.

N. Kawai, T. Sato, and N. Yokoya: "Surface completion by minimizing energy based on similarity of shape", Proc. IEEE Int. Conf. on Image Processing (ICIP2008), pp. 1532-1535, Oct. 2008.
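The energy can be sketched by describing the local shape around each surface point as a fixed-length vector (for example, heights of sample points above the local tangent plane) and summing, over the missing region, the squared distance from each local shape to its most similar local shape elsewhere on the object. The descriptor, the function name, and the brute-force search are assumptions for illustration; the iterative minimization that actually moves the vertices is not shown.

```python
def shape_energy(missing_descriptors, data_descriptors):
    """Sum over the missing region of the SSD to the most similar local shape in the data region.

    Also returns, for each missing descriptor, the index of its best match.
    """
    def ssd(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    total, matches = 0.0, []
    for m in missing_descriptors:
        best = min(range(len(data_descriptors)), key=lambda j: ssd(m, data_descriptors[j]))
        matches.append(best)
        total += ssd(m, data_descriptors[best])
    return total, matches
```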


Omnidirectional telepresence system


Abstract: This paper describes a novel telepresence system that enables users to walk through a photorealistic virtualized environment by actually walking. To realize such a system, a wide-angle, high-resolution movie is projected onto an immersive multi-screen display to present the virtualized environment to users, and a treadmill is controlled according to the user's detected locomotion. In this study, we use an omnidirectional multi-camera system to acquire images of a real outdoor scene. The proposed system gives users a rich sense of walking in a remote site.

S. Ikeda, T. Sato, M. Kanbara, and N. Yokoya: "An immersive telepresence system with a locomotion interface using high-resolution omnidirectional movies", Proc. 17th IAPR Int. Conf. on Pattern Recognition (ICPR2004), Vol. IV, pp. 396-399, Aug. 2004.


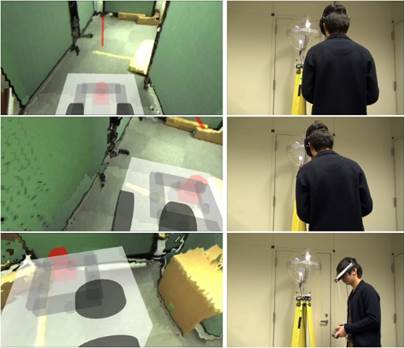

Free-viewpoint robot teleoperation


Abstract: This paper proposes a free-viewpoint interface for mobile-robot teleoperation that provides viewpoints freely configurable by the human operator's head pose. The interface employs a real-time free-viewpoint image generation method based on view-dependent geometry and texture to synthesize the scene presented to the operator. Experiments in both virtual and physical environments demonstrated that the proposed interface can improve the accuracy of robot operation compared with first- and third-person view interfaces.

F. Okura, Y. Ueda, T. Sato, and N. Yokoya: "Free-viewpoint mobile robot teleoperation interface using view-dependent geometry and texture", ITE Trans. on Media Technology and Applications, Vol. 2, No. 1, pp. 82-93, Jan. 2014.
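View-dependent texturing is often implemented by blending the captured images with weights that favor cameras whose viewing direction toward a surface point is close to that of the virtual viewpoint. The sketch below uses inverse-angle weights normalized to sum to one; this weighting function and the function names are assumptions for illustration, not the ones used in the cited interface, and the view-dependent geometry part is not covered.

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def angle_between(a, b):
    """Angle in radians between two 3-D vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return math.acos(max(-1.0, min(1.0, dot / norm)))


def view_dependent_weights(surface_point, virtual_center, camera_centers, eps=1e-6):
    """Blend weights per captured camera; larger when its ray is closer to the virtual ray."""
    virtual_ray = _sub(virtual_center, surface_point)
    raw = [1.0 / (angle_between(virtual_ray, _sub(c, surface_point)) + eps) for c in camera_centers]
    total = sum(raw)
    return [w / total for w in raw]
```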